Centralized Control Based on NDI 6: An Analysis of NDI Technology

- 2024-06-06 09:14:13

NDI 6 has recently been a hot topic in the market. In an earlier video program, the manufacturer introduced NDI 6 and its applications in related industries and shared its own views. When those videos were released on international platforms, overseas viewers could not follow the Chinese content directly, but with the help of translated subtitles they showed great enthusiasm for the manufacturer's professional commentary and paid close attention to it. We have therefore invited the manufacturer back today to explain in more depth the new ideas and viewpoints developed since then.

Manufacturer: Last time was actually quite simple. I roughly covered two new features of NDI 6, HDR and NDI Bridge, and only described some basic concepts. I didn't expect such a good response, so I feel honored to have the opportunity for another in-depth conversation today.

(Question): What is new in NDI 6? The first thing we care about is that NDI 6 has added support for HDR. From a technical perspective, what substantive leap forward does this represent?

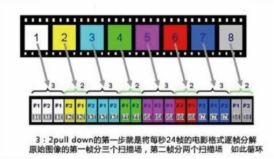

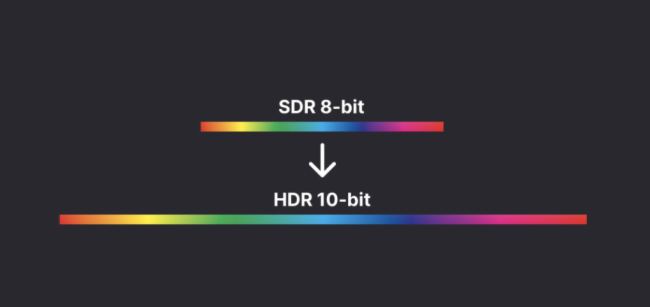

(Left): Well, HDR is actually not a new concept. More than ten years ago, photography and display technology were already talking about high dynamic range, or simply wide dynamic range, and HDR has been a relatively mature technology for a long time. The key technical detail here concerns the image data itself. If we want a wide color gamut and good dynamic performance, we face a basic problem: during video capture, compression, transmission and storage, we are constrained by technical limits such as transmission and storage bandwidth. With 8 bits there are 2 to the eighth power, or 256, levels, so a color transition has at most 256 steps. Those 256 levels can represent only a limited color range, which can cause problems when you want to present very fine detail.
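As a small illustration of the arithmetic above, the following Python sketch prints the number of levels per channel for the bit depths mentioned in this interview. The function name `levels` is only an illustrative helper, not part of any NDI API.

```python
def levels(bits: int) -> int:
    """Return how many distinct code values one channel can hold at this bit depth."""
    return 2 ** bits


# Bit depths discussed in the interview: 8, 10, 12 and 16 bits.
for bits in (8, 10, 12, 16):
    print(f"{bits}-bit: {levels(bits)} levels per channel")
```

Each additional bit doubles the number of steps available for a color or brightness transition, which is why the step from 8 to 10 bits already gives four times as many levels.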

In addition, HDR involves brightness, and displaying color involves the concept of the gamma curve; expanding on that would bring in many more details. Simply put, 8 bits struggle to represent a high dynamic range. NDI 6's support for HDR is therefore a key technological breakthrough.

We can support image compression, and transmission, at 10 bits, 12 bits and even 16 bits. This may sound simple, but it is actually quite difficult. Technically, video has to be compressed: the image is first captured and stored, and then compressed by processing on a CPU or FPGA. The first obstacle in this process is storage.

Going from 8 bits to 10 bits adds two bits, which looks like an increase of only 25%. But memory is organized in bytes of 8 bits, so storing 10 bits may mean not just two more bits but a whole extra byte per sample. That extra 25% of data can therefore consume far more memory than expected, and our memory access bandwidth may roughly double rather than grow by 25%. Higher bandwidth in turn places extremely high demands on the processor's computing performance, and the whole process becomes much harder. So the step from 8 bits to 10 bits is not an easy technical threshold to cross. That is why the industry has so far mostly chosen 8 bits for video over IP networks.
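The byte-alignment point can be sketched in a few lines of Python. This is a simplified model that assumes every sample is stored unpacked in whole bytes; packed layouts that avoid the padding also exist, at the cost of extra unpacking work. The helper names are illustrative only.

```python
import math


def bytes_per_sample(bits: int) -> int:
    """Bytes needed when each sample is stored unpacked in whole bytes (assumption)."""
    return math.ceil(bits / 8)


def storage_growth(bits: int, baseline_bits: int = 8) -> float:
    """Growth in stored bytes relative to the baseline bit depth."""
    return bytes_per_sample(bits) / bytes_per_sample(baseline_bits)


for bits in (8, 10, 12, 16):
    raw_growth = bits / 8  # growth in raw bit count relative to 8 bits
    print(
        f"{bits}-bit: raw data x{raw_growth:.2f}, "
        f"{bytes_per_sample(bits)} byte(s) per sample, "
        f"stored size x{storage_growth(bits):.1f}"
    )
```

Under this assumption, 10-bit, 12-bit and 16-bit samples all occupy two bytes, so the stored size doubles even though the raw bit count for 10 bits grows by only a quarter.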

(Question): What constraints do our devices run into in supporting HDR, and how do we overcome these difficulties?

(Left): As we just mentioned, going from 8 bits to 10 bits brings a whole series of obstacles, one after another. First, take HDMI as an example. For video captured over HDMI, our earlier image compression and processing was built around 8 bits, so when we chose a chip design we mostly considered only 8 bits. Now we have to support 10 bits, 12 bits and even 16 bits, so selecting chips and the matching hardware is the first problem to solve. Of course, this is not too difficult, because these are all mature technologies.

The second step is capturing images and temporarily storing them in memory, that is, in our buffer cache.

Here the challenge, as mentioned just now, is that bandwidth is the biggest obstacle. Our data must first be cached in memory and then read back from memory for compression. Take the luminance value of a pixel: if it fits in one byte, I store it in one byte. With a higher bit depth I may need two bytes, which means the entire bandwidth is doubled. But chip technology has its limits here, and memory access bandwidth has an upper bound. Image processing in particular is not as simple as writing the data in and reading it out, because there are many stages in between: we may have to adjust the color and process the data. For video, for example, we need steps such as scaling and de-interlacing, so it is an iterative process of reading the data out, writing it back, and reading it out and writing it back again. Many of our original chip solutions were designed on the assumption that only 8 bits were needed, so only that much bandwidth was provided. Now it is double, which means we have to find ways to optimize in our choice of solution, our chip selection and even our image processing algorithms.
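To give a feel for the scale involved, here is a back-of-the-envelope Python sketch of memory traffic for a multi-pass pipeline. Every figure in it is an assumption chosen for illustration and does not come from the interview: a 1920x1080 frame at 60 frames per second, two samples per pixel (as in 4:2:2 sampling), and three processing passes that each read and write the full frame once.

```python
def memory_traffic_bytes_per_second(
    width: int,
    height: int,
    fps: int,
    samples_per_pixel: int,
    bytes_per_sample: int,
    passes: int,
) -> int:
    """Estimate memory traffic when every pass reads and writes the whole frame once."""
    frame_bytes = width * height * samples_per_pixel * bytes_per_sample
    reads_and_writes = 2  # one read plus one write per pass
    return frame_bytes * fps * passes * reads_and_writes


# Hypothetical pipeline: color adjustment, scaling and de-interlacing as three passes.
eight_bit = memory_traffic_bytes_per_second(1920, 1080, 60, 2, 1, passes=3)
ten_bit = memory_traffic_bytes_per_second(1920, 1080, 60, 2, 2, passes=3)

print(f"8-bit samples (1 byte):   {eight_bit / 1e9:.2f} GB/s")
print(f"10-bit samples (2 bytes): {ten_bit / 1e9:.2f} GB/s")
print(f"ratio: {ten_bit / eight_bit:.1f}x")
```

The absolute numbers depend entirely on the assumed format and pass count, but the ratio does not: if each sample grows from one byte to two, every pass through memory costs twice as much.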

The third is video compression.

NDI compression uses two codecs (encoding methods): SpeedHQ, and H.264/H.265. For SpeedHQ, the official recommendation is to handle it in software. But remember that our bandwidth has doubled, which means the processing load may double as well. Note that I say it may, not that it must; this is one of the challenges where NDI 6 has made a breakthrough. If the required computing performance also doubled, a lot of hardware that could handle 8-bit processing might not be able to handle 10 bits or higher. In fact, according to the official NDI team, the performance cost is not as high as we had assumed. That is also the result of technical optimization.

Back to our question. If our original implementations lack the processing capability to cope with this new, higher bandwidth, many of our products may not be able to support NDI 6. When the manufacturer was planning its new generation of products, it had already made a lot of preparations in this regard, so both the processing performance and the bandwidth capability of the chips we chose meet the requirements. In particular, our hardware choice for implementing SpeedHQ is based on FPGA processing. In addition, our N50/N60 products, for example, have native 10-bit encoding and decoding capability for H.264 and H.265 compression and decompression.

Because of this advance preparation and foresight, we were almost the fastest to respond with NDI 6 support after its release. Indeed, when NDI 6 first came out, we were the only company in the global market at that time that could support it. The logic we just discussed lies behind this: although choosing the hardware is fairly simple, tuning the software and testing a large amount of code depend on the manufacturer's strong technical accumulation.

This is one aspect. Perhaps we should be modest, but in fact we did anticipate fairly early the direction in which video technology would develop. We therefore prepare more thoroughly in advance when choosing chip solutions and laying our technical foundations.

(Question): Beyond improving the visual experience through HDR support, what other problems can NDI 6 help us solve?

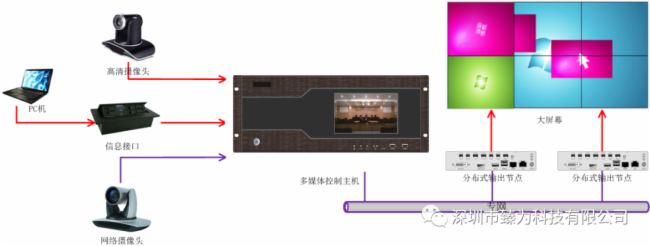

(Left): That is a good question. Although HDR is a relatively mature concept, end users' expectations for it do not seem very strong at this stage, to be honest. When most of us watch videos on a mobile device, there seems to be no particularly strong demand for this experience. But we should look at more and broader industries and fields, for example digital signage and similar large-screen displays, which may pursue better, richer color. NDI HDR may therefore bring better technical support, better experiences and more business opportunities to many industries.

Second, consider the medical field, which has very high requirements for image quality. During surgery, the colors of the blood vessels and muscles we see may be signs of certain symptoms. If the display cannot reproduce these details, the doctor's judgment may well be affected. Color representation at 10 bits or higher can be of more help in such areas.

Consider another case that many users may already have raised. When we project a computer onto a screen, some users feel that the image has been compressed (not by NDI, of course, but by other technologies) and that the colors on the screen are clearly worse than before compression. Why? The original image may be 10 bits or even higher, but its bit depth is reduced during capture and compression, so many details are lost. They cannot be recovered on restoration, and the experience suffers.

So the higher bit depth support added in NDI 6 improves not only the visual quality of streaming media but also benefits more industries. The medical industry, for example, was previously held back because the available technology could not support its applications; now these have become possible. NDI 6 will thus change applications in many industries and open up many more possibilities.
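The loss described above can be demonstrated with a simple Python sketch. It assumes the most basic form of bit depth reduction, dropping the two least significant bits of a 10-bit value and shifting back on restoration; real capture and compression pipelines are more sophisticated, so this only illustrates the principle.

```python
def reduce_bits(value: int, src_bits: int = 10, dst_bits: int = 8) -> int:
    """Drop the least significant bits to fit a smaller bit depth (simplified model)."""
    return value >> (src_bits - dst_bits)


def restore_bits(value: int, src_bits: int = 8, dst_bits: int = 10) -> int:
    """Scale back up to the larger bit depth; the dropped bits cannot be recovered."""
    return value << (dst_bits - src_bits)


# A full 10-bit gradient: every code value from 0 to 1023 appears once.
ramp = list(range(2 ** 10))
round_trip = [restore_bits(reduce_bits(v)) for v in ramp]

unique_before = len(set(ramp))
unique_after = len(set(round_trip))
max_error = max(abs(a - b) for a, b in zip(ramp, round_trip))

print(f"distinct levels before: {unique_before}")
print(f"distinct levels after round trip: {unique_after}")
print(f"largest error in 10-bit code values: {max_error}")
```

After the round trip the gradient has only a quarter of its original distinct levels, which is the kind of detail loss that shows up as visibly coarser color transitions.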

(Question): What practical problems can NDI Bridge help us solve?

(Left): In the media industry, and in other industries too, we all share one pain point: how to cross the Internet when doing real-time live broadcasts or real-time media transmission. Crossing the Internet sounds easy because the Internet is now so well developed, but carrying streaming media across it brings many challenges. The first problem is latency, the second is whether your bandwidth can carry high-quality images, and the third is whether it is secure enough, especially in professional scenarios. Even when these are solved, industries such as broadcast media and news editing often have many signal streams in these scenarios, not just one. How can these streams be delivered at the same time, or even kept in sync? How do they get from one place to another across the Internet? And is the whole process simple enough that it does not require a large number of complex devices, systems or tools? When you think about it, these issues look very complex.

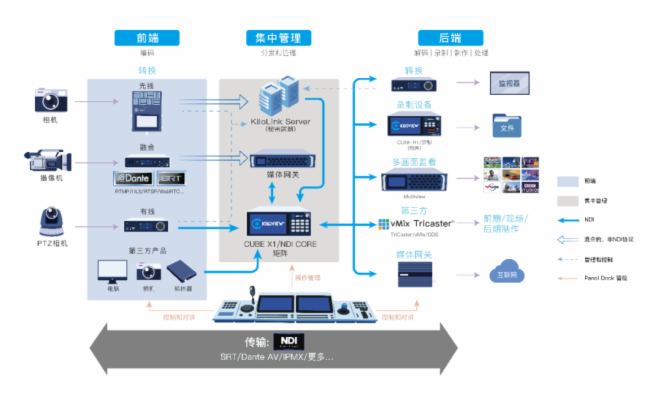

So the current market offers many solutions, such as SRT, cloud-based solutions and others we are familiar with. But solving these problems creates new ones. For example, many people convert NDI to SRT and send it over the Internet. But once the local NDI has become SRT for the Internet leg while the studio still runs on NDI, how do we convert it back to NDI?

In this process, different technical standards meet and are converted back and forth, which affects latency and image quality and can cause unpredictable problems during conversion. In general it is very troublesome. NDI was actually talking about this many years ago. I remember a slogan from the NDI 5 release, and in fact NDI has officially been working toward this theme for years: the whole world is your stage and your studio. It means that from anywhere with an Internet connection, you can use NDI technology to send signals from any corner of the world to your studio. That is its philosophy. With NDI Bridge (Utility) now, I think this goal has been achieved.

NDI Bridge actually existed in NDI 5, but at that time it was only a specific official tool and could be used only on Windows. Now that it has officially been put into the SDK, any device can use this tool and its technology. NDI Bridge carries signals from any end of the Internet to your studio, and we no longer need additional conversions along the way. NDI video therefore remains NDI from one place to another and continues to enjoy the conveniences of NDI, such as automatic discovery and plug and play. Because there is no repeated conversion from one system to another, it is more efficient, has lower latency and is more reliable. All NDI metadata, including tally, PTZ control and camera control, is also carried across Bridge. More importantly, it is free to all users. So NDI has really lived up to the slogan it originally promoted: the world is your studio. As long as you are connected to the Internet, NDI can get your signal from anywhere in the world to wherever you want, which is amazing.

In other words, building on the ecosystem it had already established, NDI 6 connects every link more simply and quickly at no extra cost. No additional conversions are required, and it is safer and better synchronized. From a technical perspective, I think this also matches the manufacturer's own Software Driven vision. As an official NDI partner, the manufacturer is highly aligned with NDI in this respect.

(Question): From the perspective of NDI 6's performance, improvements or long-term direction, what are your thoughts as a manufacturer? And is NDI 6 backward compatible?

(Left): NDI 6 is definitely backward compatible. Earlier on, with NDI HX, the technology was still quite immature, so there may be compatibility issues, for example between NDI HX1 and the current HX2 and HX3. But NDI has remained backward compatible since NDI 4, so there is no problem in this regard. One issue does deserve attention, though. As we just discussed, adding support for video compression and decompression at 10 bits or higher means HDR has some compatibility issues with the original non-HDR codec parts. At the very least, we can say that encoding and decoding HDR video is definitely not supported on old SDK versions. So there may be some minor compatibility issues of this kind in future applications. But these are things we can foresee, so they will not affect us seriously.

(Question): Can we predict some future directions based on our reading of NDI's official technology?

(Left): The original concept behind NDI, as mentioned just now, is highly consistent with the manufacturer's own philosophy from its early days as a company. This is why we have been able to stay together for so long. The core concept of NDI 6 is Software Driven Video. NDI has always aimed to solve every problem through software rather than relying too heavily on hardware and chips. Of course, this is difficult. As we mentioned, many of the manufacturer's products are implemented on FPGAs, and FPGAs are essentially hardware. We rely on hardware support there for performance reasons or other special considerations. But NDI itself can compress and decompress video entirely in software, and all our transmission can be based on software and IP protocols. Being software-based gives this kind of transmission several advantages. The first is flexibility, and plenty of it. In our words, it is not picky: you will not find that it works on one kind of hardware but not on another. With a general-purpose CPU, a general-purpose operating system and software technology, everything can be implemented.

The second is cost, which is also critical. If we use FPGAs, the cost will of course be higher. A general-purpose CPU or an ARM-based processor, by contrast, is very easy to obtain, because in this large ecosystem such processors are cheap enough for us to build on at very low cost. More importantly, NDI can be completely independent of hardware. This is what NDI now lets everyone see: it is implemented on every major operating system (Windows, Linux, macOS). That is another advantage. It makes the ecosystem easy to grow, which is why NDI's ecosystem is currently the most complete and mature in AV over IP. For example, software companies such as Microsoft and Adobe, along with many others, have been able to support and embrace NDI quickly and actively, because NDI is itself a software technology that needs no complex adaptation and has no hardware requirements. In addition, NDI's own processing consumes very little CPU, so it is very easy to implement and there are no particular obstacles. In fact, if we compare many other AV over IP technologies, such as STR and IPMX, or if we look at IP technologies such as SDVoE, although they say it is AV over.
